MediaPipe update 2023

Please note that MediaPipe has seen major changes in 2023 and now offers a redesigned API. The code in this post still works as of mediapipe==0.10.7. Check out this post for more details on the new API.

In 2019, Google open-sourced MediaPipe, a set of machine learning-based solutions for a variety of computer vision problems. Although currently still in alpha, the ease of use, speed and performance of the provided pretrained models are very impressive. MediaPipe is cross-platform and most of the solutions are available in C++, Python, JavaScript and even on mobile platforms.

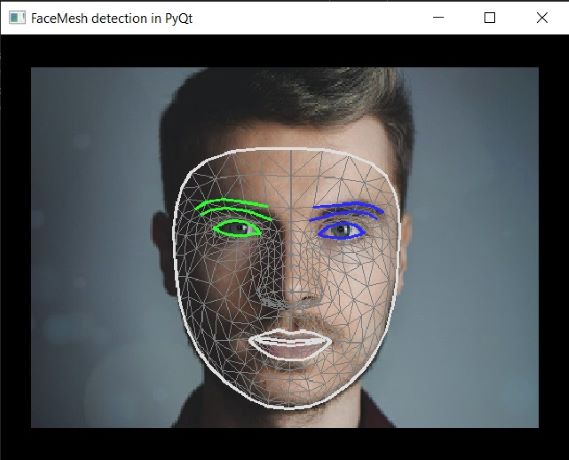

This article illustrates how to apply MediaPipe’s facial landmark detector (Face Mesh), how to access landmark coordinates in Python and how to implement Face Mesh in a live graphical user interface with PyQt & pyqtgraph.

Installation and minimal working example

MediaPipe’s Python solutions can be installed with pip (check out the documentation for more details):

```shell
pip install mediapipe
```

The documentation also features minimal working examples for all available APIs. The Python examples show how to use FaceMesh in combination with OpenCV to find and display facial features for a single image or a continuous webcam stream. The main functionality is achieved in only three lines of code.

```python
import cv2
import mediapipe as mp

# ...

# initialize face_mesh with several configuration options
with mp.solutions.face_mesh.FaceMesh(...) as face_mesh:
    # process image in RGB
    results = face_mesh.process(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))

    # draw results with different drawing settings
    mp.solutions.drawing_utils.draw_landmarks(...)
```

About Face Mesh

Although MediaPipe’s programming interface looks very simple, there is a lot going on under the hood.

Face Mesh utilizes a pipeline of two neural networks to identify the 3D coordinates of 468(!) facial landmarks. No typo here: three-dimensional coordinates from a two-dimensional image. The first network (BlazeFace) computes face locations from the full image. The second network then operates only on a cropped region to identify landmark locations. The entire pipeline is built for speed and takes less than 10 ms per frame on high-end smartphones.
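To make the two-stage idea more concrete, here is a simplified sketch of the coordinate bookkeeping it implies. It is not MediaPipe’s internal code: it assumes the second network returns landmarks normalized to an axis-aligned crop and ignores any rotation of the crop. The helper `crop_to_image_coords` and its `(x_min, y_min, width, height)` box layout in pixels are hypothetical.

```python
import numpy as np


def crop_to_image_coords(crop_xy, box, img_w, img_h):
    """Map landmarks normalized to a crop back to full-image normalized coordinates.

    Hypothetical helper for illustration only.
    crop_xy: (N, 2) values in [0, 1], relative to the crop.
    box: (x_min, y_min, width, height) of the crop in pixels (axis-aligned).
    """
    x_min, y_min, bw, bh = box
    crop_xy = np.asarray(crop_xy, dtype=float)
    # scale crop-relative units to pixels, then shift by the crop origin
    px = x_min + crop_xy[:, 0] * bw
    py = y_min + crop_xy[:, 1] * bh
    # re-normalize by the full image size
    return np.stack([px / img_w, py / img_h], axis=1)


# a 200x200 px face crop with its top-left corner at (100, 50) in a 640x480 image
pts = crop_to_image_coords([[0.5, 0.5], [0.0, 1.0]], box=(100, 50, 200, 200),
                           img_w=640, img_h=480)
# the crop center (0.5, 0.5) lands at (0.3125, 0.3125) in the full image
```

Because the landmark network only ever sees the small crop, its cost does not grow with the resolution of the input image, which fits the speed-oriented design described above.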

Accessing landmark coordinates

The results returned by face_mesh.process hold a list, multi_face_landmarks, with one NormalizedLandmarkList per face detected in the image (or None if no face was found). As the name suggests, the landmark coordinates are normalized by the image dimensions to values between 0 and 1.

The landmark list object is a protobuf message, which unfortunately does not interact well with typical Python packages such as NumPy. To extract the landmark coordinates into a NumPy array of pixel coordinates, we can use the following function:

```python
import numpy as np


def get_facemesh_coords(landmark_list, img):
    """Extract FaceMesh landmark coordinates into 468x3 NumPy array.
    """
    h, w = img.shape[:2]  # grab width and height from image
    xyz = [(lm.x, lm.y, lm.z) for lm in landmark_list.landmark]

    return np.multiply(xyz, [w, h, w]).astype(int)

# call: get_facemesh_coords(results.multi_face_landmarks[0], image)
```

Note that the z coordinate is scaled with the image width (same as x) and that it is relative to the face’s average distance from the camera (read more in the docs).
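The scaling can be checked without a camera or MediaPipe installed. The sketch below feeds `get_facemesh_coords` a stand-in object built with `types.SimpleNamespace` that only mimics the `.landmark` field of a NormalizedLandmarkList; the landmark values are made up for illustration, not real detections.

```python
from types import SimpleNamespace

import numpy as np


def get_facemesh_coords(landmark_list, img):
    """Extract FaceMesh landmark coordinates into an (N, 3) NumPy array."""
    h, w = img.shape[:2]  # grab height and width from image
    xyz = [(lm.x, lm.y, lm.z) for lm in landmark_list.landmark]
    return np.multiply(xyz, [w, h, w]).astype(int)


# Hypothetical stand-in for a NormalizedLandmarkList (made-up values)
fake_list = SimpleNamespace(landmark=[
    SimpleNamespace(x=0.5, y=0.25, z=-0.01),
    SimpleNamespace(x=0.1, y=0.9, z=0.02),
])
image = np.zeros((480, 640, 3), dtype=np.uint8)  # height 480, width 640

coords = get_facemesh_coords(fake_list, image)
print(coords)  # x and z are multiplied by 640, y by 480
```

Note that `astype(int)` truncates toward zero (z = -0.01 becomes -6, not -6.4 rounded); use `np.rint(...).astype(int)` if rounding to the nearest pixel is preferred.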

Using the above function, we can obtain the 3D coordinates of the facial landmarks and process them further in Python. For example, the script below renders the landmarks as a rotating 3D scatter plot and saves the animation as facemesh_coordinates.gif:

```python
import cv2
import numpy as np
import matplotlib.pyplot as plt
from matplotlib.animation import FuncAnimation, PillowWriter
import mediapipe as mp


def get_facemesh_coords(landmark_list, img):
    """Extract FaceMesh landmark coordinates into 468x3 NumPy array.
    """
    h, w = img.shape[:2]  # grab width and height from image
    xyz = [(lm.x, lm.y, lm.z) for lm in landmark_list.landmark]

    return np.multiply(xyz, [w, h, w]).astype(int)


image = cv2.imread("<filename>")  # read any image containing a face

# Initialize FaceMesh
with mp.solutions.face_mesh.FaceMesh(static_image_mode=True,
                                     refine_landmarks=False,
                                     max_num_faces=1,
                                     min_detection_confidence=0.5
                                     ) as face_mesh:
    # Convert the BGR image to RGB and process it with MediaPipe Face Mesh.
    results = face_mesh.process(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))

coords = get_facemesh_coords(results.multi_face_landmarks[0], image)

# plot coordinates. Use image's Y coordinate in z direction.
# needs to be inverted as image coordinates start from top.
# color by distance to camera.
fig = plt.figure(figsize=[4, 4])
ax = fig.add_axes([0, 0, 1, 1], projection='3d')
ax.scatter(coords[:, 0], coords[:, 2], -coords[:, 1], c=coords[:, 2],
           cmap="PuBuGn_r", clip_on=False, vmax=2*coords[:, 2].max())
ax.elev = -5
ax.dist = 6  # note: Axes3D.dist is deprecated in newer Matplotlib releases
ax.axis("off")

# make sure equal scale is used across all axes. From Stackoverflow
# https://stackoverflow.com/questions/13685386/matplotlib-equal-unit-length-with-equal-aspect-ratio-z-axis-is-not-equal-to
xlim = ax.get_xlim()
ylim = ax.get_ylim()
zlim = ax.get_zlim()
max_range = np.array([np.diff(xlim), np.diff(ylim), np.diff(zlim)]).max() / 2.0
mid_x = np.mean(xlim)
mid_y = np.mean(ylim)
mid_z = np.mean(zlim)
ax.set_xlim(mid_x - max_range, mid_x + max_range)
ax.set_ylim(mid_y - max_range, mid_y + max_range)
ax.set_zlim(mid_z - max_range, mid_z + max_range)


# build animated loop
def rotate_view(frame, azim_delta=1):
    ax.azim = -20 - azim_delta * frame


animation = FuncAnimation(fig, rotate_view, frames=360, interval=15)
writer = PillowWriter(fps=15)
animation.save("facemesh_coordinates.gif", writer=writer, dpi=72)
```
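The equal-scale block in the script can also be factored into a small pure function, which makes it reusable and testable without creating a figure. `cube_limits` is a hypothetical helper name; it performs the same arithmetic as the StackOverflow-based lines above: half of the largest axis range becomes the common half-width, centered on each axis’s midpoint.

```python
import numpy as np


def cube_limits(xlim, ylim, zlim):
    """Return three (low, high) limits of equal length, centered on the original ranges."""
    limits = np.array([xlim, ylim, zlim], dtype=float)  # shape (3, 2)
    half_range = np.diff(limits, axis=1).max() / 2.0    # half of the largest range
    centers = limits.mean(axis=1)                       # midpoint of each axis
    return [(float(c - half_range), float(c + half_range)) for c in centers]


# usage with a 3D axes object `ax`:
# for setter, lim in zip((ax.set_xlim, ax.set_ylim, ax.set_zlim),
#                        cube_limits(ax.get_xlim(), ax.get_ylim(), ax.get_zlim())):
#     setter(*lim)
print(cube_limits((0, 10), (0, 4), (-1, 1)))  # [(0.0, 10.0), (-3.0, 7.0), (-5.0, 5.0)]
```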

Live Face Mesh detection in PyQt

The provided examples work well as standalone scripts, but some modifications are needed to integrate the functionality into a bigger application. In yarppg, which detects the user’s heart rate from webcam video, Face Mesh is used to identify the region of interest (ROI) for further processing.

yarppg’s user interface is built with PyQt. The following sections illustrate how Face Mesh is integrated into the PyQt application. You can download the complete source code with DownGit.

To facilitate future extensions, we want to follow a modular approach and decouple processing from the graphical user interface. While this modular setup requires some additional coding effort, it pays off in the long run. For a minimal example, we implement the following modules:

| Filename | Description |
| --- | --- |
| camera.py | providing Camera, which wraps the OpenCV input stream inside a PyQt thread. |
| rppg.py | implementing the orchestrator class RPPG, which interacts with the camera and triggers face mesh processing. |
| mainwindow.py | providing a custom GUI class derived from QMainWindow. |
| main.py | launching the application; run the program with python main.py |

Info

In the next post, the RPPG class will be extended to extract a region of interest from the landmarks and compute an rPPG signal.

First, we need to read the webcam feed. Reading from the camera blocks until a new frame arrives, so to avoid blocking the application and to process frames while waiting for new inputs, Camera is implemented as a QThread. It wraps an OpenCV VideoCapture object, runs a loop until stop is called and emits new frames via a pyqtSignal.

Here is the complete code:

```python
# camera.py
import cv2
import numpy as np
from PyQt5.QtCore import QThread, pyqtSignal


class Camera(QThread):
    """Wraps cv2.VideoCapture and emits Qt signal with frames in RGB format.

    The `run` function launches a loop that waits for new frames in the
    VideoCapture and emits them with a `frame_received` signal. Calling
    `stop` stops the loop and releases the camera.
    """

    frame_received = pyqtSignal(np.ndarray)
    """PyQt Signal emitting new frames read from the camera.
    """

    def __init__(self, video=0, parent=None):
        """Initialize Camera instance.

        Args:
            video (int or string): ID of camera or video filename
            parent (QObject): parent object in Qt context
        """
        super().__init__(parent=parent)

        self._cap = cv2.VideoCapture(video)
        self._running = False

    def run(self):
        """Start loop in thread capturing incoming frames.
        """
        self._running = True
        while self._running:
            ret, frame = self._cap.read()

            if not ret:
                self._running = False
                raise RuntimeError("No frame received")

            self.frame_received.emit(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))

    def stop(self):
        """Stop loop and release camera.
        """
        self._running = False
        # wait until run() has returned, so the capture is not released
        # while a read is still in progress
        self.wait()
        self._cap.release()
```
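To experiment with the capture loop without Qt or a camera, the same pattern (a worker thread reading frames in a loop, handing each one to a callback, and stopped by clearing a flag and joining the thread before releasing the source) can be sketched with the standard library’s `threading` module. This swaps the PyQt signal for a plain callback; `ThreadedSource` and `fake_read` are hypothetical stand-ins for Camera and `cv2.VideoCapture.read`.

```python
import threading
import time


class ThreadedSource:
    """Qt-free sketch of the Camera pattern: read frames in a worker thread
    and hand each one to a callback."""

    def __init__(self, read_frame, on_frame):
        self._read_frame = read_frame  # returns (ok, frame), like VideoCapture.read
        self._on_frame = on_frame      # plays the role of frame_received.emit
        self._running = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def _run(self):
        while self._running.is_set():
            ok, frame = self._read_frame()
            if not ok:
                break  # end of stream: leave the loop (Camera raises instead)
            self._on_frame(frame)

    def start(self):
        self._running.set()
        self._thread.start()

    def wait(self, timeout=None):
        """Block until the worker loop has returned (similar to QThread.wait)."""
        if self._thread.ident is not None:  # join() fails on a never-started thread
            self._thread.join(timeout)

    def stop(self):
        self._running.clear()
        self.wait()  # only release resources after the loop has exited


# Hypothetical frame source: yields 0..4, then reports end of stream.
frames = iter(range(5))


def fake_read():
    time.sleep(0.001)  # pretend to wait for the camera
    try:
        return True, next(frames)
    except StopIteration:
        return False, None


received = []
src = ThreadedSource(fake_read, received.append)
src.start()
src.wait()  # returns once the fake stream is exhausted
src.stop()
print(received)  # [0, 1, 2, 3, 4]
```

One difference to keep in mind: here the callback runs in the worker thread, whereas in the Qt version the cross-thread signal is queued and the connected slot runs in the receiver’s thread.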

The orchestrating class (RPPG)

The main component combines the other parts of the application and manages the flow of information. It receives new frames, calls the face detector and emits the outputs to be displayed by the user interface.

The frames delivered by the Camera class’s frame_received signal are processed in the on_frame_received slot. A container with the results is emitted again as a PyQt signal (rppg_updated). The output container is a named tuple holding the raw image and the detected landmarks. Using a named tuple makes it easy to extend the outputs later without larger changes to the signal and slot interfaces.

```python
from collections import namedtuple

RppgResults = namedtuple("RppgResults", ["rawimg", "landmarks"])


class RPPG(QObject):
    # ...

    def on_frame_received(self, frame):
        """Process new frame - find face mesh and emit outputs.
        """
        rawimg = frame.copy()
        results = self.detector.process(frame)

        self.rppg_updated.emit(RppgResults(rawimg, results))
```
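The extensibility argument can be illustrated with the `defaults` argument of `collections.namedtuple` (Python 3.7+): appending a field with a default value keeps every existing `RppgResults(rawimg, landmarks)` call working, while new code can fill in the extra field. The `roi` field below is hypothetical, chosen in the spirit of the region of interest mentioned for the next post.

```python
from collections import namedtuple

import numpy as np

# original two-field container
RppgResults = namedtuple("RppgResults", ["rawimg", "landmarks"])

# hypothetical extension: an extra "roi" field that defaults to None
RppgResultsExt = namedtuple("RppgResults", ["rawimg", "landmarks", "roi"],
                            defaults=[None])

frame = np.zeros((2, 2, 3), dtype=np.uint8)
old_style = RppgResultsExt(frame, None)                      # existing call sites still work
new_style = RppgResultsExt(frame, None, roi=(10, 20, 30, 40))
print(old_style.roi, new_style.roi)  # None (10, 20, 30, 40)
```

Since slots access the fields by name (`output.rawimg`, `output.landmarks`), they keep working unchanged when a field is appended.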

In this implementation, the detector is a bare Face Mesh instance. Note that using Face Mesh through a context manager (as is done in the documentation) inside of on_frame_received would come with a considerable performance penalty, because a new instance would be initialized for every frame. Looking into the context manager implementation, we see that the only additional code is ‘closing’ the instance upon exit:

```python
# taken from https://github.com/google/mediapipe/
def __enter__(self):
    """A "with" statement support."""
    return self

def __exit__(self, exc_type, exc_val, exc_tb):
    """Closes all the input sources and the graph."""
    self.close()
```

Thus, when using a bare instance, we need to call detector.close() ourselves before finishing up.
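The difference between the two usage patterns can be made visible with a stand-in detector that counts how often it is constructed. `DummyDetector` is hypothetical and only mimics the `process`/`close` and `__enter__`/`__exit__` shape of the MediaPipe class shown above; for the real Face Mesh, each construction sets up a new processing graph, which is what makes the per-frame variant expensive.

```python
class DummyDetector:
    """Hypothetical stand-in with the same lifecycle shape as FaceMesh."""
    instances = 0

    def __init__(self):
        DummyDetector.instances += 1  # count (expensive) constructions
        self.closed = False

    def process(self, frame):
        return frame

    def close(self):
        self.closed = True

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc_val, exc_tb):
        self.close()


frames = range(100)

# Anti-pattern: a context manager inside the per-frame slot
for frame in frames:
    with DummyDetector() as det:
        det.process(frame)
per_call = DummyDetector.instances

# Pattern used in RPPG: one long-lived instance, closed explicitly at the end
DummyDetector.instances = 0
detector = DummyDetector()
try:
    for frame in frames:
        detector.process(frame)
finally:
    detector.close()  # what __exit__ would otherwise have done
print(per_call, DummyDetector.instances, detector.closed)  # 100 1 True
```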

Below is the complete code of the RPPG orchestrator in rppg.py:

```python
# rppg.py
from collections import namedtuple

import numpy as np
from PyQt5.QtCore import pyqtSignal, QObject
import mediapipe as mp

from camera import Camera

RppgResults = namedtuple("RppgResults", ["rawimg", "landmarks"])


class RPPG(QObject):

    rppg_updated = pyqtSignal(RppgResults)

    def __init__(self, parent=None, video=0):
        """rPPG model processing incoming frames and emitting calculation
        outputs.

        The signal RPPG.rppg_updated provides a named tuple RppgResults
        containing
          - rawimg: the raw frame from camera
          - landmarks: results object returned by FaceMesh.process
            (holding multi_face_landmarks)
        """
        super().__init__(parent=parent)

        self._cam = Camera(video=video, parent=parent)
        self._cam.frame_received.connect(self.on_frame_received)

        self.detector = mp.solutions.face_mesh.FaceMesh(
            max_num_faces=1,
            refine_landmarks=False,
            min_detection_confidence=0.5,
            min_tracking_confidence=0.5
        )

    def on_frame_received(self, frame):
        """Process new frame - find face mesh and emit outputs.
        """
        rawimg = frame.copy()
        results = self.detector.process(frame)

        self.rppg_updated.emit(RppgResults(rawimg, results))

    def start(self):
        """Launch the camera thread.
        """
        self._cam.start()

    def stop(self):
        """Stop the camera thread and clean up the detector.
        """
        self._cam.stop()
        self.detector.close()
```

The PyQt GUI (MainWindow)

Finally, we connect the logical component to the user interface. This demo only implements a minimal interface that shows the camera feed and the facial landmarks found with Face Mesh. In the constructor, RPPG’s rppg_updated signal is connected to the on_rppg_updated method, which renders the output to screen.

```python
class MainWindow(QMainWindow):
    def __init__(self, rppg):
        """MainWindow visualizing the output of the RPPG model.
        """
        super().__init__()

        rppg.rppg_updated.connect(self.on_rppg_updated)
        self.init_ui()

    def on_rppg_updated(self, output):
        """Update UI based on RppgResults.
        """
        img = output.rawimg.copy()
        draw_facemesh(img, output.landmarks, tesselate=True, contour=True)
        self.img.setImage(img)
```

Here, draw_facemesh is a convenient wrapper around the default drawing functions provided with MediaPipe’s Face Mesh. See the full implementation:

```python
# mainwindow.py
from PyQt5.QtWidgets import QMainWindow
import pyqtgraph as pg
import mediapipe as mp

mp_drawing = mp.solutions.drawing_utils
mp_drawing_styles = mp.solutions.drawing_styles
mp_face_mesh = mp.solutions.face_mesh


class MainWindow(QMainWindow):
    def __init__(self, rppg):
        """MainWindow visualizing the output of the RPPG model.
        """
        super().__init__()

        rppg.rppg_updated.connect(self.on_rppg_updated)
        self.init_ui()

    def on_rppg_updated(self, output):
        """Update UI based on RppgResults.
        """
        img = output.rawimg.copy()
        draw_facemesh(img, output.landmarks, tesselate=True, contour=True)
        self.img.setImage(img)

    def init_ui(self):
        """Initialize window with pyqtgraph image view box in the center.
        """
        self.setWindowTitle("FaceMesh detection in PyQt")

        layout = pg.GraphicsLayoutWidget()
        self.img = pg.ImageItem(axisOrder="row-major")
        vb = layout.addViewBox(invertX=True, invertY=True, lockAspect=True)
        vb.addItem(self.img)

        self.setCentralWidget(layout)


def draw_facemesh(img, results, tesselate=False,
                  contour=False, irises=False):
    """Draw all facemesh landmarks found in an image.

    Irises are only drawn if the corresponding landmarks are present,
    which requires FaceMesh to be initialized with refine_landmarks=True.
    """
    if results is None or results.multi_face_landmarks is None:
        return

    for face_landmarks in results.multi_face_landmarks:
        if tesselate:
            mp_drawing.draw_landmarks(
                image=img,
                landmark_list=face_landmarks,
                connections=mp_face_mesh.FACEMESH_TESSELATION,
                landmark_drawing_spec=None,
                connection_drawing_spec=mp_drawing_styles
                .get_default_face_mesh_tesselation_style())
        if contour:
            mp_drawing.draw_landmarks(
                image=img,
                landmark_list=face_landmarks,
                connections=mp_face_mesh.FACEMESH_CONTOURS,
                landmark_drawing_spec=None,
                connection_drawing_spec=mp_drawing_styles
                .get_default_face_mesh_contours_style())
        # the protobuf message itself has no len(); count its repeated
        # `landmark` field (478 entries with refined iris landmarks)
        if irises and len(face_landmarks.landmark) > 468:
            mp_drawing.draw_landmarks(
                image=img,
                landmark_list=face_landmarks,
                connections=mp_face_mesh.FACEMESH_IRISES,
                landmark_drawing_spec=None,
                connection_drawing_spec=mp_drawing_styles
                .get_default_face_mesh_iris_connections_style())
```

Final application

The minimal program can be run with a short launching script using python main.py:

```python
# main.py
from PyQt5.QtWidgets import QApplication

from mainwindow import MainWindow
from rppg import RPPG

if __name__ == "__main__":
    app = QApplication([])
    rppg = RPPG(video=0, parent=app)
    win = MainWindow(rppg=rppg)
    win.show()

    rppg.start()
    app.exec_()
    rppg.stop()
```

The final application displays the live camera feed with the Face Mesh tesselation and contours drawn on top. Of course, there is still more to do to detect the heart rate of the person sitting in front of the camera, as is done in yarppg. More on that will follow in the next post(s).

The full source code can be downloaded with DownGit.
