MediaPipe update 2023

Please note that MediaPipe has seen major changes in 2023 and now offers a

redesigned API.

The code in this post still works as of

mediapipe==0.10.7. Check out

this post for more details on

the new API.

With every heartbeat, the color of your skin changes slightly. While this effect

is invisible to the human eye, a normal camera is able to pick up these tiny

differences. This means that a regular webcam can be used to measure a person’s

heart rate. In scientific literature, this process is most commonly referred to

as remote photoplethysmography (rPPG).

Most rPPG solutions consist of three components: region of interest (ROI)

identification, pulse signal extraction and heart rate calculation.

In this post, we will tackle ROI identification and pulse signal extraction,

with a simplified version of the method proposed by Li et al., which I

implemented in yarppg.

In the approach demonstrated here, the face is detected with

Face Mesh and the average green channel of the lower face

is extracted as the pulse signal.

Region of interest (ROI) identification

Typically, the face is chosen as the ROI and a pulse signal is computed from the

average color inside the region. Other approaches detect skin in general rather

than faces or consider periodic head movements. A thorough overview of different

computer vision approaches is given by Rouast et al.

In the previous post,

I demonstrated how MediaPipe’s Face Mesh is applied in

yarppg to find faces in webcam video.

In the minimalistic version from before, an orchestrator class (RPPG) processes

new frames from the camera in on_frame_received and emits a named tuple with

the raw image and the Face Mesh output. You can download the full code from the

previous post with DownGit.

```python
def on_frame_received(self, frame):
    """Process new frame - find face mesh and emit outputs.
    """
    rawimg = frame.copy()

    results = self.detector.process(frame)
    self.rppg_updated.emit(RppgResults(rawimg, results))
```

We can extend this work to extract a heartbeat signal from the changing

skin color. Following the suggestion by Li et al., only the lower half

of the face will be used as the ROI. This is where we need to access the

actual face mesh coordinates with the get_facemesh_coords function introduced

in the last post.
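As a quick reminder of what that function does: Face Mesh reports landmarks in normalized coordinates, so they have to be scaled by the image size before they can define a pixel mask. The sketch below reproduces this scaling on made-up values. The `Landmark` tuple and the name `to_pixel_coords` are hypothetical stand-ins, used only so the snippet runs without MediaPipe or a camera.

```python
from collections import namedtuple

import numpy as np

# Hypothetical stand-in for a MediaPipe landmark (normalized x, y, z).
Landmark = namedtuple("Landmark", ["x", "y", "z"])


def to_pixel_coords(landmarks, img_shape):
    """Scale normalized landmarks to integer pixel coordinates (N x 3)."""
    h, w = img_shape[:2]
    xyz = [(lm.x, lm.y, lm.z) for lm in landmarks]
    # x and z are scaled by the image width, y by the image height,
    # mirroring what get_facemesh_coords does.
    return np.multiply(xyz, [w, h, w]).astype(int)


landmarks = [Landmark(0.5, 0.5, 0.0), Landmark(0.25, 0.75, -0.1)]
coords = to_pixel_coords(landmarks, img_shape=(480, 640, 3))
print(coords[:, :2])  # x/y pixel positions only
```

Only the first two columns (x and y) are needed for the ROI, which is why the detector below slices the array with `:2`.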

To do this, the Face Mesh detector is wrapped in a ROIDetector class.

```python
class ROIDetector:
    _lower_face = [200, 431, 411, 340, 349, 120, 111, 187, 211]  # mesh indices

    # def __init__ ...

    def process(self, frame):
        """Find single face in frame and extract lower half of the face.
        """
        results = self.face_mesh.process(frame)
        point_list = []
        if results.multi_face_landmarks is not None:
            coords = get_facemesh_coords(results.multi_face_landmarks[0], frame)
            point_list = coords[self._lower_face, :2]  # :2 -> only x and y
        roimask = fill_roimask(point_list, frame)
        return roimask, results
```

After calling the Face Mesh detector, 9 of the 468 landmark locations are

extracted to define the ROI around the lower face. fill_roimask fills

a binary mask with the contour spanned by these points.

Here is the complete source code for the detector class.

```python
# detector.py
import cv2
import mediapipe as mp
import numpy as np


def get_facemesh_coords(landmark_list, img):
    """Extract FaceMesh landmark coordinates into 468x3 NumPy array.
    """
    h, w = img.shape[:2]  # grab height and width from image
    xyz = [(lm.x, lm.y, lm.z) for lm in landmark_list.landmark]

    return np.multiply(xyz, [w, h, w]).astype(int)


def fill_roimask(point_list, img):
    """Create binary mask, filled inside contour given by list of points.
    """
    mask = np.zeros(img.shape[:2], dtype="uint8")
    if len(point_list) > 2:
        contours = np.reshape(point_list, (1, -1, 1, 2))  # expected by OpenCV
        cv2.drawContours(mask, contours, 0, color=255, thickness=cv2.FILLED)
    return mask


class ROIDetector:
    """Identifies lower face as region of interest.
    """
    _lower_face = [200, 431, 411, 340, 349, 120, 111, 187, 211]  # mesh indices

    def __init__(self):
        """Initialize detector (Mediapipe FaceMesh).
        """
        self.face_mesh = mp.solutions.face_mesh.FaceMesh(
            max_num_faces=1,
            refine_landmarks=False,
            min_detection_confidence=0.5,
            min_tracking_confidence=0.5
        )

    def process(self, frame):
        """Find single face in frame and extract lower half of the face.
        """
        results = self.face_mesh.process(frame)
        point_list = []
        if results.multi_face_landmarks is not None:
            coords = get_facemesh_coords(results.multi_face_landmarks[0], frame)
            point_list = coords[self._lower_face, :2]  # :2 -> only x and y
        roimask = fill_roimask(point_list, frame)
        return roimask, results

    def close(self):
        """Finish up (close Face Mesh instance).
        """
        self.face_mesh.close()
```

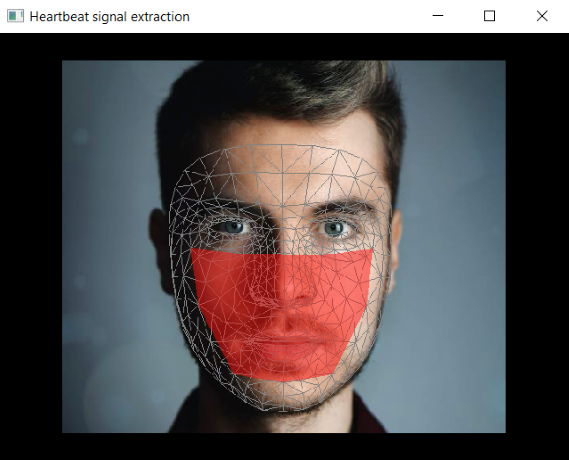

The detected ROI will look something like this:

About landmark indices

Finding the Face Mesh indices of the lower face took some “reverse engineering”.

This notebook

in the yarppg repository provides more insights into the landmark indices.

According to the literature, the green channel provides the strongest pulsatile

signal. This is because hemoglobin absorbs green light particularly well.

With the ROI specified as a binary mask, extracting the average green channel

of the lower face comes almost for free, as a masked mean calculation is

a feature of OpenCV. The orchestrator’s on_frame_received is extended to

perform the calculation:

```python
    # inside __init__:
    self.detector = ROIDetector()
    self.signal = []

def on_frame_received(self, frame):
    """Process new frame - find face mesh and extract pulse signal.
    """
    rawimg = frame.copy()
    roimask, results = self.detector.process(frame)

    r, g, b, a = cv2.mean(rawimg, mask=roimask)
    self.signal.append(g)

    self.rppg_updated.emit(RppgResults(rawimg=rawimg, roimask=roimask,
                                       landmarks=results, signal=self.signal))
```

To allow the UI to display the extracted signal, a reference to the

internal signal list is emitted inside the RppgResults tuple.

Here is the complete code for the modified RPPG class:

```python
# rppg.py
from collections import namedtuple

from PyQt5.QtCore import pyqtSignal, QObject
import cv2

from camera import Camera
from detector import ROIDetector

RppgResults = namedtuple("RppgResults", ["rawimg",
                                         "roimask",
                                         "landmarks",
                                         "signal",
                                         ])


class RPPG(QObject):

    rppg_updated = pyqtSignal(RppgResults)

    def __init__(self, parent=None, video=0):
        """rPPG model processing incoming frames and emitting calculation
        outputs.

        The signal RPPG.rppg_updated provides a named tuple RppgResults
        containing
          - rawimg: the raw frame from camera
          - roimask: binary mask filled inside the region of interest
          - landmarks: results object returned by FaceMesh
            (holds multi_face_landmarks)
          - signal: reference to a list containing the signal
        """
        super().__init__(parent=parent)

        self._cam = Camera(video=video, parent=parent)
        self._cam.frame_received.connect(self.on_frame_received)

        self.detector = ROIDetector()
        self.signal = []

    def on_frame_received(self, frame):
        """Process new frame - find face mesh and extract pulse signal.
        """
        rawimg = frame.copy()
        roimask, results = self.detector.process(frame)

        r, g, b, a = cv2.mean(rawimg, mask=roimask)
        self.signal.append(g)

        self.rppg_updated.emit(RppgResults(rawimg=rawimg,
                                           roimask=roimask,
                                           landmarks=results,
                                           signal=self.signal))

    def start(self):
        """Launch the camera thread.
        """
        self._cam.start()

    def stop(self):
        """Stop the camera thread and clean up the detector.
        """
        self._cam.stop()
        self.detector.close()
```

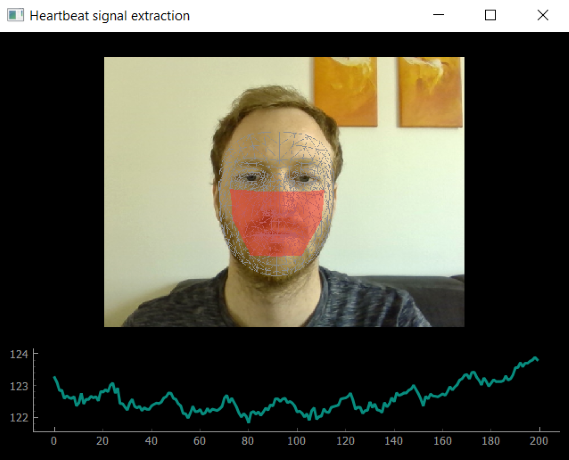

In a stationary setting (no movement and no lighting changes) the unprocessed

green channel should be more than enough to obtain a solid heartbeat signal.

Several more sophisticated signal extraction techniques have been suggested

to deal with challenges that arise in less constrained environments (see Rouast

et al.).
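To illustrate why a clean green-channel trace is already informative, the sketch below builds a synthetic signal with a small 1.2 Hz oscillation and recovers that frequency with an FFT. Everything here is an assumption made for illustration: the frame rate, the amplitudes and the noise level are invented, and heart rate calculation itself is not part of the code in this post.

```python
import numpy as np

fps = 30.0                # assumed camera frame rate
t = np.arange(300) / fps  # 10 seconds of samples
rng = np.random.default_rng(0)

# Synthetic stand-in for the green-channel trace: constant skin tone,
# a small 1.2 Hz (72 bpm) pulse component and some sensor noise.
signal = 120.0 + 0.5 * np.sin(2 * np.pi * 1.2 * t) + rng.normal(0, 0.2, t.size)

# Remove the mean, then pick the strongest frequency inside a plausible
# heart rate band (0.7-3 Hz, i.e. 42-180 bpm).
spectrum = np.abs(np.fft.rfft(signal - signal.mean()))
freqs = np.fft.rfftfreq(signal.size, d=1 / fps)
band = (freqs >= 0.7) & (freqs <= 3.0)
peak_hz = freqs[band][np.argmax(spectrum[band])]
print(f"dominant frequency: {peak_hz:.2f} Hz, about {60 * peak_hz:.0f} bpm")
```

Real recordings are far less tidy than this toy signal, which is where the more robust extraction techniques come in.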

Visualizing the pulse signal with pyqtgraph

Now, the extracted pulse signal can be presented in the user interface.

The pyqtgraph library provides a fast plotting

solution that integrates well with PyQt. It is much faster than matplotlib,

although slightly less convenient.

We add a pyqtgraph plot item below the webcam output.

```python
# import pyqtgraph as pg

def init_ui(self):
    layout = pg.GraphicsLayoutWidget()
    # ...
    self.plot = layout.addPlot(row=1, col=0)
    self.line = self.plot.plot(pen=pg.mkPen("#078C7E", width=3))
    self.setCentralWidget(layout)
```

Live plotting of the signal can be achieved with one additional line

of code in the on_rppg_updated method:

```python
def on_rppg_updated(self, output):
    # ...
    self.line.setData(y=output.signal[-200:])
```

Note that only the last 200 elements in the signal list are plotted, which

results in a moving window of around 6-7 seconds at 30 FPS.
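The window length follows directly from the sample count and the frame rate. The short sketch below spells out the arithmetic and shows one possible variation, a bounded `collections.deque`, which is not what this post uses. The frame rate of 30 FPS is an assumption about the camera.

```python
from collections import deque

fps = 30      # assumed camera frame rate
window = 200  # number of samples shown in the plot

# 200 samples at 30 samples per second
print(f"{window / fps:.1f} s of signal are visible")

# Optional variation (not used in the post): a bounded buffer that drops
# old samples automatically instead of slicing an ever-growing list.
signal = deque(maxlen=window)
for value in range(1000):  # pretend 1000 frames arrived
    signal.append(float(value))

print(len(signal), signal[0], signal[-1])
```

The plain list used in this post keeps the entire history, which is handy for later processing but grows for as long as the application runs. If you try the deque variant, converting it with `list(signal)` before passing it to `setData` is the safe choice.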

Here is the complete code for the main window:

```python
# mainwindow.py
import cv2
from PyQt5.QtWidgets import QMainWindow
import pyqtgraph as pg
import mediapipe as mp
import numpy as np

mp_drawing = mp.solutions.drawing_utils
mp_drawing_styles = mp.solutions.drawing_styles
mp_face_mesh = mp.solutions.face_mesh

pg.setConfigOption("antialias", True)


class MainWindow(QMainWindow):
    def __init__(self, rppg):
        """MainWindow visualizing the output of the RPPG model.
        """
        super().__init__()

        rppg.rppg_updated.connect(self.on_rppg_updated)
        self.init_ui()

    def on_rppg_updated(self, output):
        """Update UI based on RppgResults.
        """
        img = output.rawimg.copy()
        draw_facemesh(img, output.landmarks, tesselate=True, contour=False)
        img = draw_roimask(img, output.roimask, color=(221, 43, 42))
        self.img.setImage(img)

        self.line.setData(y=output.signal[-200:])

    def init_ui(self):
        """Initialize window with pyqtgraph image view box in the center.
        """
        self.setWindowTitle("Heartbeat signal extraction")

        layout = pg.GraphicsLayoutWidget()
        self.img = pg.ImageItem(axisOrder="row-major")
        vb = layout.addViewBox(invertX=True, invertY=True, lockAspect=True)
        vb.setMinimumSize(320, 320)  # force webcam view to be taller than plot
        vb.addItem(self.img)

        self.plot = layout.addPlot(row=1, col=0)
        self.line = self.plot.plot(pen=pg.mkPen("#078C7E", width=3))

        self.setCentralWidget(layout)


def draw_roimask(img, roimask, weight=0.5, color=(255, 0, 0)):
    """Highlight region of interest with specified color.
    """
    overlay = img.copy()
    overlay[roimask != 0, :] = color
    outimg = cv2.addWeighted(img, 1-weight, overlay, weight, 0)
    return outimg


def draw_facemesh(img, results, tesselate=False,
                  contour=False, irises=False):
    """Draw all facemesh landmarks found in an image.

    Irises are only drawn if the corresponding landmarks are present,
    which requires FaceMesh to be initialized with refine_landmarks=True.
    """
    if results is None or results.multi_face_landmarks is None:
        return

    for face_landmarks in results.multi_face_landmarks:
        if tesselate:
            mp_drawing.draw_landmarks(
                image=img,
                landmark_list=face_landmarks,
                connections=mp_face_mesh.FACEMESH_TESSELATION,
                landmark_drawing_spec=None,
                connection_drawing_spec=mp_drawing_styles
                .get_default_face_mesh_tesselation_style())
        if contour:
            mp_drawing.draw_landmarks(
                image=img,
                landmark_list=face_landmarks,
                connections=mp_face_mesh.FACEMESH_CONTOURS,
                landmark_drawing_spec=None,
                connection_drawing_spec=mp_drawing_styles
                .get_default_face_mesh_contours_style())
        # The landmark list is a protobuf message; count its entries via
        # the repeated `landmark` field (len() on the message itself fails).
        if irises and len(face_landmarks.landmark) > 468:
            mp_drawing.draw_landmarks(
                image=img,
                landmark_list=face_landmarks,
                connections=mp_face_mesh.FACEMESH_IRISES,
                landmark_drawing_spec=None,
                connection_drawing_spec=mp_drawing_styles
                .get_default_face_mesh_iris_connections_style())
```

Final application

The main.py script puts all pieces together. It initializes the components and

launches the graphical user interface. It has not changed since the

last post:

```python
# main.py
from PyQt5.QtWidgets import QApplication

from mainwindow import MainWindow
from rppg import RPPG

if __name__ == "__main__":
    app = QApplication([])
    rppg = RPPG(video=0, parent=app)
    win = MainWindow(rppg=rppg)
    win.show()

    rppg.start()
    app.exec_()
    rppg.stop()
```

The minimal GUI looks as follows, plotting the extracted pulse signal below

the webcam output. You can download the full code here.

The raw signal can be rather noisy due to automatic camera adjustments and

inaccuracies in the ROI detection.

The next post will show how a digital filter can be implemented in pure

Python to smooth the live signal.

(References)